GroupWise Mobility Service (GMS) Best Practice Guide

Relevant to GroupWise Mobility versions 18.3.1 and higher (18.3.1, 18.4.x, 18.5, 23.4, 24.1, 24.2)

GMS 24.2 - Released Apr 9, 2024

New Features: Ability to view attendee status for appointments on the same GMS system as the organizer; improved mCheck recovery of missing items; fixes to customer-reported bugs. Additionally, changes were made to the way GMS is tied into Python, which had caused problems when performing SLES or GMS patches. The GMS Python modules use Python 3.11 and are no longer dependent on the SUSE Python libraries installed and used by various components of the Operating System.

GMS 24.1 - Released Jan 17, 2024

Updates the Django and Python libraries to currently supported versions: 4.2 for Django and 3.11 for Python. Django and Python no longer provide as-needed security fixes and support for previous versions of their libraries. GroupWise Mobility Service 24.1 must be run on SLES 15 SP4 or later due to these changes. Upon installation of GroupWise Mobility Service 24.1, SLES 15 SP4 or later will auto-enable the needed Python 3.11 extension. (Please don't upgrade GMS to version 24.1 unless your SLES 15 SP4 server has first been fully patched from the SUSE channel.)

GMS 23.4 - Released Oct 25, 2023

GMS 23.4 added a few new features as well as rebranding from Micro Focus to Open Text.

- Rebranded from Micro Focus to Open Text.

- Notify User when Mobility Account is Provisioned.

- Generate CSV List of Inactive Users.

GMS 18.5 - Released May 23, 2023

GMS 18.5 was mostly bug fixes.

GMS 18.4.2 - Released Dec 6, 2022

GMS 18.4.1 - Released Jun 22, 2022

GMS 18.4 was mostly bug fixes and a few feature enhancements. Here's an overview.

- MCHECK was dramatically improved for usability and administrative functionality. Prior to this release, MCHECK was practically useless. MCHECK was never much of an app, largely because the DSAPP utility (now deprecated) was so widely used instead. Now that DSAPP will not even run with GroupWise Mobility, MCHECK is the only supported tool, and its feature set has been dramatically improved.

- Support for TLS 1.3, along with some considerations for certificates and certificate verification related to the GroupWise 18.4 release.

- Support for the BTRFS and XFS file systems (EXT4 is still recommended).

GMS 18.3.2 - Released Sep 22, 2021

Mostly bugfixes and documentation updates.

GMS 18.3.1 - Released Jul 28, 2021

GMS version 18.3.1 brought significant architectural changes to the underlying Python code that runs GMS. Additionally, there were some other major changes that affected the provisioning and authentication options available to a GroupWise administrator. Here is a summary of the major changes that were introduced:

- Python version 3

Earlier versions of GroupWise Mobility were built on Python 2. This became an increasing problem once Python 3 became the de facto standard on SLES 15. With the release of GroupWise Mobility 18.3.1, Python 2 was abandoned completely, and GMS is now built entirely on Python 3.

- Starting/Stopping GroupWise Mobility

Changes to the way you start and stop GroupWise Mobility:

- You no longer have the ability to start/stop the agents from the GMS dashboard.

- The way you stop the agents on the command line has also changed.

- Provisioning and Authentication via LDAP Groups & GroupWise Groups

Only GroupWise "Groups" and "Users" are supported for user provisioning and authentication. LDAP is no longer an option for either.

- SSL Certificates

SSL Certificates have moved locations and been renamed.

- Upgrades from Older Versions

You cannot upgrade an older GroupWise Mobility to version 18.3.1 or higher. It's not supported, and the code will not let you do it. You must install a new server. Note: Yes, I have tried to get around this. No, it doesn't work, and it's not worth it.

Upgrades & Patches to GMS servers

I added this section to address the questions and possible challenges you may have when the time comes to apply updates or patches. Over the years, some of the biggest problems I've run into were the result of a problematic update process on either the Linux or the GMS side of things. It's difficult to anticipate every possible scenario or potential issue, so instead I'm providing some rule-of-thumb guidelines for the different types of updates you may need to do.

The 3 Different Patching Needs

There are 3 different things you'll inevitably need to patch on a GMS server. They are outlined in more detail below but include:

- SLES 15 updates from the SUSE Channel

- GroupWise Mobility patches or updates to a newer release.

- SLES 15 Major Service Pack Releases (For example, SP4 upgrading to SP5)

SLES 15 Updates from the SUSE Channel

These are minor updates, and it's common to have hundreds of patches available after only a few months. These updates are typically applied via the "zypper up" or "zypper up -t patch" command (although there are other options).

- Perform the updates during a defined maintenance window. Shut off the GMS services and take a snapshot before applying, just in case GMS services don't load correctly after the update.

- It's generally a bad idea to leave GMS services running while applying updates during full production, because it's very likely the system will stop syncing mail. Yes, it depends on which modules are being patched, but you never know for certain which modules will cause GMS to stop working.

- When applying patches from the channel, you need to reboot the server immediately after the update. Do not try to keep the system online until later. It is likely that GMS won't be operational until you reboot.

- Generally speaking, I recommend NOT configuring automatic updates in SLES on a GMS server. This is because the updates could cause GMS to stop functioning and you won't know why.
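Here's a minimal sketch of the channel-update guidelines above as a maintenance-window script. It is not a supported procedure: the `run` and `gms_channel_patch` functions and the `DRY_RUN` switch are hypothetical names, and the snapshot still has to be taken in your hypervisor before you call it. It only strings together steps from this guide (`gms stop`, `zypper up -t patch`, reboot) plus zypper's documented informational exit codes 102 (reboot needed) and 103 (zypper updated itself and must be re-run).

```shell
#!/bin/bash
# Hypothetical helper: print a command instead of running it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Hypothetical maintenance-window routine for SUSE channel updates on a GMS server.
# Take the VM snapshot in your hypervisor BEFORE calling this; the script cannot.
gms_channel_patch() {
    local status
    run gms stop || return 1      # stop GMS so nothing syncs while modules change
    run zypper up -t patch        # interactive by default; review what it proposes
    status=$?
    # zypper exit 103: zypper updated itself and must be run again.
    # zypper exit 102: patches were installed and a reboot is needed.
    if [ "$status" -eq 103 ]; then
        run zypper up -t patch
        status=$?
    fi
    case "$status" in
        0|102) ;;
        *) echo "zypper exited with $status; not rebooting" >&2; return 1 ;;
    esac
    run reboot                    # reboot right away instead of waiting until later
}
```

Running `DRY_RUN=1 gms_channel_patch` first prints the command sequence without touching the server.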

GroupWise Mobility Service Updates and Patches (From GMS 18.3.1 and higher)

GMS 24.1 and GMS 24.2 require SLES 15 SP4 or higher due to the Python and Django library changes, and I strongly recommend going a step further: please ensure that ALL SLES patches are installed from the SUSE channel before upgrading GMS to version 24.1 or 24.2.
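Before starting a 24.x upgrade, you can confirm the service pack level from `/etc/os-release`, where SLES 15 SPn reports `VERSION_ID="15.n"`. This is a minimal sketch with hypothetical function names; it only encodes the SP4 minimum stated above and says nothing about whether the server is fully patched.

```shell
#!/bin/bash
# Hypothetical check: succeed only for SLES 15 SP4 or later (VERSION_ID 15.4, 15.5, ...).
sles_meets_gms24_minimum() {
    local version_id="$1" major minor
    major="${version_id%%.*}"
    minor="${version_id#*.}"
    # No dot means no service pack (SLES 15 GA reports VERSION_ID="15").
    [ "$minor" = "$version_id" ] && minor=0
    case "$major" in ""|*[!0-9]*) return 1 ;; esac   # reject non-numeric input
    case "$minor" in ""|*[!0-9]*) return 1 ;; esac
    [ "$major" -eq 15 ] && [ "$minor" -ge 4 ]
}

# Read VERSION_ID in a subshell so sourcing /etc/os-release does not pollute this shell.
current_version_id() {
    ( . /etc/os-release && printf '%s\n' "$VERSION_ID" )
}
```

Usage: `sles_meets_gms24_minimum "$(current_version_id)" || echo "Upgrade SLES before installing GMS 24.x"`.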

The process to update GMS is the same for all GMS versions 18.3.1 and higher. Using the full installation media, the installer updates all of the code and performs all necessary updates. In most cases the process is relatively painless. It goes like this:

- Save the GMS media file (something like groupwise-mobility-service-xx.x-x86-64.iso) to a location on the Linux file system where GMS is running.

- Mount the ISO image to the file system.

- mhcfs03 # mount -o loop groupwise-mobility-service-xx.x-x86-64.iso /mnt

- This command mounts the ISO CD image to the /mnt folder. That is where the installation will run from.

- Run the installer from the /mnt folder.

- mhcfs03:/mnt # ./install.sh

- Accept the license agreement.

- Generally, step through the various options until finished. Note the following:

- Perform air gap install? (yes/no) [no] (Unless you have a very specific reason to do so, you do not need this.)

- You'll be prompted to shut down GroupWise Mobility and update the service. Go ahead and allow that.

"The update process may take some time. During this process, the GroupWise Mobility Service will be shut down. Are you sure you want to update the GroupWise Mobility Service now? (yes/no):"

- You'll be prompted to reset the log levels to INFO. I generally leave the logs alone, but it's very situational.

Reset log level for all GroupWise Mobility Services to Info? (yes/no) [yes]:no

- You'll be prompted to enable anonymous information to be sent to Open Text. Pick your preference here.

Enable anonymous information to be automatically sent to Open Text to improve your GroupWise mobile experience? (yes/no) [yes]:

- GMS will restart after the update.

- If you have any problems during the update and it aborts, you will have to resolve the issue and start over.
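The mount-and-install steps above can be wrapped in a small function so the ISO is always unmounted afterwards. This is a minimal sketch: `gms_iso_update`, the optional mount-point argument, and the `DRY_RUN` switch are hypothetical, and the final `umount` is my addition rather than a step from the guide. `install.sh` stays interactive, so you still answer its prompts as described above.

```shell
#!/bin/bash
# Hypothetical helper: print a command instead of running it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# Hypothetical wrapper: mount the GMS ISO, run its installer, then unmount it.
gms_iso_update() {
    local iso="$1" mnt="${2:-/mnt}" status
    [ -f "$iso" ] || { echo "ISO not found: $iso" >&2; return 1; }
    run mount -o loop "$iso" "$mnt" || return 1
    # Run the installer from the mount point, as in the steps above.
    ( cd "$mnt" && run ./install.sh )
    status=$?
    run umount "$mnt"             # clean up even if the installer aborted
    return "$status"
}
```

For example: `gms_iso_update groupwise-mobility-service-xx.x-x86-64.iso` (substitute your actual ISO file name).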

Major SLES Service Packs (e.g., SLES 15 SP5)

These can be done offline using an ISO image, but I generally use the "zypper migration" command to perform an online upgrade. This process works and it's my go-to method for service packs. Note the following considerations:

- Perform the updates during a defined maintenance window. Shut off the GMS services and take a snapshot before applying, just in case GMS services don't load correctly after the update.

- Before applying a major service pack, I always apply all available updates from the channel. Follow the process outlined above for applying channel updates on a GMS server.

- If part of your plan is to upgrade GroupWise Mobility (in-place upgrade), do that BEFORE applying the SLES Service Pack. I suggest this for several reasons, and not all of them are intuitive or easy to explain. The main reason is that the newer GMS build patches some bugs that could be problematic during a major SLES OS upgrade. If you are not planning on updating GMS and you are not already on the latest GMS version (meaning GMS v23.4 or newer), I would strongly suggest reconsidering.
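To enforce the "apply all channel updates first" rule before a `zypper migration`, you can gate on `zypper patch-check`, which exits 100 when needed patches are pending, 101 when at least one of them is a security patch, and 0 when nothing is pending. This is a minimal sketch with a hypothetical function name; it does not check your GMS version, so upgrading GMS first remains a manual step.

```shell
#!/bin/bash
# Hypothetical pre-flight check before running "zypper migration".
ready_for_sp_migration() {
    local status
    zypper --quiet patch-check >/dev/null 2>&1
    status=$?
    case "$status" in
        0)
            echo "No pending channel patches; OK to plan zypper migration." ;;
        100|101)
            echo "Channel patches are pending: apply them and reboot first." >&2
            return 1 ;;
        *)
            echo "zypper patch-check failed with exit code $status." >&2
            return 1 ;;
    esac
}
```

Usage, inside the maintenance window: `ready_for_sp_migration && zypper migration`.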

Functionality Test after Patches/Upgrades

Regardless of what type of patch or update was applied, these are the general checks you should perform afterwards to confirm that the system is functioning correctly.

- After the system boots up again, confirm that all GMS services are running at the command line. Do this via the "gms status" command:

- mhcfs03# gms status

- Log in to the GMS dashboard (https://xx.xx.xx.xx:8120) and confirm that everything is functioning as expected:

- Ensure that the "GroupWise Sync Agent" and the "Device Sync Agent" both show as "running" on the Home page of the dashboard.

- Make sure you can see statistics on the Dashboard. You should see numbers and status on each of the indicators. If it's blank, GMS has a problem.

- Look at your user list and confirm that all users are listed as expected.

- Review any Agent Alerts, especially RED ones, and take any appropriate action.

- Run an MCHECK General Health Check and address any issues reported.

- Check mail flow from devices. Confirm that you are able to send and receive mail correctly.

- Watch for any critical GMS alerts that show up in the GMS dashboard and handle them accordingly. Note the date/time stamp of each alert; sometimes the alerts are old and unrelated to what you're doing at the moment.

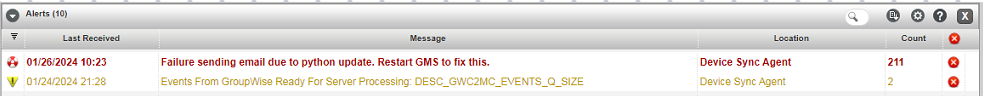

- Specifically keep an eye on the GMS dashboard for this alert. You may not see it immediately; sometimes it won't appear for a couple of hours, depending on what people are doing on their mobile devices. The alert means that a SLES update overwrote one of the Python modules that GMS needs. GMS detected this and corrected it by copying in the correct module, but you must restart GMS for the fix to take effect. With GMS 18.5 and higher, this alert is very common after any SLES patches or updates are applied. Older versions of GMS did not generate this alert.

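The command-line parts of this checklist can be scripted; the dashboard review, MCHECK, and a real mail-flow test still need a human. This is a minimal sketch: `gms_post_patch_check` is a hypothetical name, the host argument (default `localhost`) is an assumption, and port 8120 is the dashboard port used in this guide. It only confirms that the dashboard answers over HTTPS (curl reports `000` when it cannot connect); it does not judge the `gms status` output for you.

```shell
#!/bin/bash
# Hypothetical post-patch check: show "gms status" and confirm the dashboard answers.
gms_post_patch_check() {
    local host="${1:-localhost}" code
    echo "== gms status =="
    gms status                       # review this output by eye
    # -k accepts the certificate as-is; we only care that something answers.
    code="$(curl -k -s -o /dev/null -w '%{http_code}' "https://$host:8120/")"
    if [ -z "$code" ] || [ "$code" = "000" ]; then
        echo "Dashboard on $host:8120 did not respond" >&2
        return 1
    fi
    echo "Dashboard on $host:8120 answered with HTTP $code"
}
```

Any HTTP code (including a redirect to the login page) counts as "answering" here; log in afterwards to check statistics, users, and alerts.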

Contingency

If GMS is in a state of failure after any update and it does not appear to be an easy or quick fix, consider reverting to the snapshot unless you can easily identify the issue. It's likely a Python-related issue, and there are some TIDs that discuss these. However, it's not always cut and dried.

Version Upgrades from older GMS to GMS 18.3.1 and higher

If you're running an older version of GMS such as GMS 14.x, 18.0, 18.1, 18.2, or 18.3.0, you cannot upgrade. To get to GMS version 18.3.1 or higher, you must build a new server. There is no upgrade path and there is no migration path. Use the rest of this guide to help deploy a new server.

System Build Recommendations

VMware Options

Disk Controller:

- Disk Controller 1: Paravirtual

- Disk Controller 2: Paravirtual (Optional)

- Disk Controller 3: Paravirtual (Optional)

Using separate controllers isolates the Operating System disk activity from GroupWise Mobility, improving performance. Paravirtual is the best option for performance because the guest uses a virtualization-aware driver instead of an emulated controller, which reduces overhead.

** Do not install SLES using a different controller and then change it to Paravirtual. This will likely render the server unbootable, and the disk partitions may not be detected the same way. **

Disk Configuration

Choose Thin or Thick disks based on the analysis below.

Note the following: On a small system (up to around 50 users), you probably do not need to worry about using multiple disks. A single partition will likely work fine. However, if you get into larger systems with more data, you will benefit from splitting off the GMS data to a separate partition. There are some considerations to this strategy because there are multiple directories where data is stored, and it's not always easy to know how much space is needed, and where.

- Disk 1: 100GB / Assign to the first controller (Disk 0:1)

- Disk 2 (GMS Data, Optional): Allocate enough space for GroupWise Mobility data plus 25-30% for growth.

- Assign to the second controller (Disk 1:1).

- Choose the provisioning type:

- Thin provisioning is adequate in many cases, especially in small to medium systems.

- Thick-provisioned eager-zeroed disks are better for systems with larger user bases and heavier demands.

- If you have different tiers of storage (fast, slow, near, far, SSD, spinny disks, whatever), put the VMDK file in a Datastore that features the fastest storage available.

- Disk 3 (Logging Disk, Optional): 50GB.

- Assign to the third controller (Disk 2:1).

- Set up the provisioning type the same as Disk 2.

If you have a larger and heavily utilized system, and squeezing every last bit of performance out of the drive is critical to a happy user base, consider the following:

- A thick-provisioned eager-zeroed disk will write data faster than a thin-provisioned disk.

- A thin-provisioned disk exhibits the same performance as a lazy-zeroed thick-provisioned disk. Because thin provisioning allows the datastore to be overcommitted, it can cause problems as the datastore approaches its maximum capacity.

Boot Options / Firmware

In VMware, under the VM Options --> Boot Options, there is an option called Firmware. You can choose either "BIOS" or "EFI". I don't have a hard preference, and both will work fine. There are pros and cons to each one. But this setting is one of those things you don't want to change after you've set it one way or the other. I've never seen a difference from an OS or GroupWise functionality standpoint using one or the other.

BIOS

Pros: It's generally more familiar and I would say simpler to work with. Disk partitioning is very straightforward and simple. With the BIOS setting, you are able to use standard disk tools to manipulate and resize partitions (if you need to expand at some point).

Cons: It is a legacy setting.

EFI

Pros: EFI is the standard moving forward and offers some functionality and security not available to the BIOS setting.

Cons: The current toolsets on Linux that support EFI are extremely limited compared to what's available with a standard BIOS. For example, if you ever need to resize a partition, fewer tools are available with EFI. This could also depend on your file system, partitioning type, and how you approach it, e.g., with a bootable offline tool like gparted or with native OS tools while the system is online and running.

Note that your partition layout will differ depending on which option you choose here.
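Because this setting shouldn't be changed later, it helps to know which one an existing server was built with. The kernel only exposes `/sys/firmware/efi` when the system booted via EFI, so a check like this minimal sketch (the `boot_mode` name and its optional path argument are hypothetical, the argument exists only so the check can be exercised against another directory) tells you from inside the guest.

```shell
#!/bin/bash
# Report whether the running system booted via EFI or legacy BIOS.
# /sys/firmware/efi exists only on EFI boots.
boot_mode() {
    if [ -d "${1:-/sys/firmware/efi}" ]; then
        echo "EFI"
    else
        echo "BIOS"
    fi
}
```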

Server OS Selection

I generally use the latest version of SLES 15 available when installing GroupWise Mobility. The only supported operating systems for GroupWise Mobility at the moment are:

- SLES 15 SP4 (Worked well with GMS 18.3.x and 18.4.x. Sometimes problematic with GMS 18.5)

- SLES 15 SP5 (Released on June 20, 2023. Works well with GMS 18.5 and higher.)

SLES 15 SP5 was released on June 20, 2023. I use SLES 15 SP5 for ALL GMS implementations at the moment. There is no reason to use any earlier version of SLES 15.

** UPGRADE NOTE **

- Can you upgrade an older version of GMS (14.x, 18.0, 18.1, 18.2, 18.3.0) to version 18.3.1? No. Build a new server.

- Can you upgrade an older version of GMS (14.x, 18.0, 18.1, 18.2, 18.3.0) to any newer GMS version such as GMS 18.5 or GMS 23.4/24.1/24.2? No. Build a new server.

- Can you upgrade GMS version 18.3.1, GMS 18.4.x, or GMS 18.5.x to GMS 23.4, GMS 24.1, or GMS 24.2? Yes, you should be able to, as long as your SLES server is running a supported SLES version.

File System Selection and Partitioning

SLES 15 defaults to BTRFS. Do not use it. Instead, change the partitioning to EXT4. This is required per the documentation:


Specify only EXT4 as the GMS server’s File System: SLES 15 SP2 and later default to BTRFS and you must manually change this to EXT4.

Additionally, note the following:

- The SLES 15 installer proposes a /home partition that uses half of the available disk space. It's important to NOT use the defaults for anything; otherwise, you will waste a massive amount of space, since nothing in Mobility uses the /home folder. Start the partitioning from scratch and define your own partitions.

Note About GMS 18.4 and BTRFS

With GMS 18.4 there is now some support for the BTRFS file system (see the statement from the GMS 18.4 release notes below). However, I personally prefer NOT to use BTRFS and do not trust that it is stable for use in a production GMS system.

Load Script Support: Load scripts now run on the BTRFS and XFS file systems. However, EXT4 is still the strongly recommended file system for GMS.

Separate Disk/Partition for GMS Data

If you utilize a 2nd partition for GMS data, it is important to understand the storage structure of GMS and where the data is kept. Per the documentation:

The largest consumers of disk space are the Mobility database (/var/lib/pgsql) and Mobility Service log files (/var/log/datasync). You might want to configure the Mobility server so that /var is on a separate partition to allow for convenient expansion. Another large consumer of disk space is attachment storage in the /var/lib/datasync/mobility/attachments directory.

Typically, with a 2nd disk, I mount it to the /var directory. This will put /var and all subfolders on the 2nd disk, including non-GMS files located in /var. This is typically fine.

Possible 3rd Disk for Logging

The one "possible" downside of mounting disk 2 to /var is that both GMS data and all server logging (including GMS logging) will be on the same disk. The partition could fill up due to the amount of logging GMS can do (especially in diagnostic logging mode). On very large systems I sometimes create a 3rd disk and mount it specifically to /var/log. That prevents excessive logging from affecting the GMS services.
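To see how much space each of the large consumers named in the documentation is using, and which file system it actually lives on, `du` and `df -T` are enough. This is a minimal sketch; `gms_space_report` is a hypothetical name, and passing directories as arguments is just a convenience for checking other paths.

```shell
#!/bin/bash
# Hypothetical report: size and file system for the main GMS data locations.
gms_space_report() {
    local dir
    if [ "$#" -eq 0 ]; then
        set -- /var/lib/pgsql /var/log/datasync /var/lib/datasync/mobility/attachments
    fi
    for dir in "$@"; do
        if [ ! -d "$dir" ]; then
            echo "missing: $dir" >&2
            continue
        fi
        du -sh "$dir"                # space used by this directory tree
        df -hT "$dir" | tail -n 1    # device, file system type (should be ext4), free space
    done
}
```

Watching these numbers over time is a practical way to size Disk 2 (and a possible /var/log disk) with the 25-30% growth margin mentioned earlier.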

All about SSL Certificates

SSL Certificates Name and Location

SSL Certificates for GroupWise Mobility have been renamed and moved to a new location with GroupWise Mobility 18.3.1 and beyond:

Here is the developers' description of each file and its purpose:

- The gms_server.pem is used internally by GMS and should NOT be replaced with your public certificate that is used for Devices & Webadmin.

- The gms_mobility.pem is used by the Devices and Webadmin and should be replaced with a public certificate.

- The gms_mobility.cer is rarely used but is generated from the gms_mobility.pem. It is mostly for backward compatibility with older devices that require the manual addition of the certificate. It is accessed via the user login of the webadmin.

My understanding from the developers is that with Python 3 they were able to simplify the certificate implementation. This layout is a result of that simplification, even though it does seem more complex.

3rd Party Trusted SSL Certificates

It's nearly impossible to use self-signed certificates anymore; most phones require trusted certs in order to communicate. I am not going to go into detail about how to generate the certificates, but this section shows what you need to do to take your existing 3rd party certificates and configure them on a GMS server. This is what you'll need:

- The private key file (That you generated when you created the CSR to submit to your SSL vendor)

- Importantly, GMS requires that the private key file does NOT have a password on it. This is the exact opposite of what is required by the GroupWise Agents.

- The server certificate (Generally .crt file) from your SSL vendor

- The SSL Vendor's intermediate SSL Certificate.
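Before combining anything, it's worth confirming that the private key actually belongs to the server certificate. One way, sketched below under the assumption that the `openssl` CLI is installed, is to compare the public key derived from the key (`openssl pkey -pubout`) with the one embedded in the certificate (`openssl x509 -pubkey`). The `key_matches_cert` name is hypothetical. The empty `-passin pass:` keeps it non-interactive, so a password-protected key simply fails the check, which is also what GMS needs you to catch.

```shell
#!/bin/bash
# Hypothetical check: does this private key belong to this server certificate?
# Compares public keys, so it works for RSA and EC keys alike.
key_matches_cert() {
    local key_pub cert_pub
    key_pub="$(openssl pkey -in "$1" -pubout -passin pass: 2>/dev/null)" || return 1
    cert_pub="$(openssl x509 -in "$2" -noout -pubkey 2>/dev/null)" || return 1
    [ -n "$key_pub" ] && [ "$key_pub" = "$cert_pub" ]
}
```

Usage: `key_matches_cert passwordless.key server.crt && echo "key and certificate match"`.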

Combining All Certificate and Key Files into a single PEM file

GMS requires that all of your certificate files are combined into a single PEM file. You basically concatenate all the files together using the sequence below. Please note that you will need to use the names of your specific files, not the samples I have used.

# cat passwordless.key > gms_mobility.pem (Ensure that you are using the Key File that does NOT have a password on it)

# cat server.crt >> gms_mobility.pem (This is the certificate provided by the SSL vendor)

# cat intermediate.crt >> gms_mobility.pem (This is the Intermediate certificate provided by the SSL vendor)

# cp gms_mobility.pem /var/lib/datasync/

# gms restart
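The same concatenation can be done by a small helper that also refuses a password-protected key and avoids one common pitfall: if a file lacks a trailing newline, `cat` glues its `-----END ...-----` line onto the next `-----BEGIN ...-----` line. This is a minimal sketch; `build_gms_pem` is a hypothetical name, the "ENCRYPTED" header test is a heuristic for PEM-encrypted keys, and the owner-only permissions (`umask 077`) are my choice because the combined file contains the private key.

```shell
#!/bin/bash
# Hypothetical helper: build gms_mobility.pem in the order shown above
# (passwordless private key, server certificate, intermediate certificate).
build_gms_pem() {
    local key="$1" cert="$2" chain="$3" out="$4" f
    for f in "$key" "$cert" "$chain"; do
        [ -r "$f" ] || { echo "cannot read $f" >&2; return 1; }
    done
    # Encrypted PEM keys carry "ENCRYPTED" in their header; GMS needs a passwordless key.
    if grep -q 'ENCRYPTED' "$key"; then
        echo "$key is password-protected; use the passwordless key" >&2
        return 1
    fi
    # "awk 1" copies each file and guarantees a trailing newline per file,
    # so no END line runs into the next BEGIN line.
    ( umask 077 && awk 1 "$key" "$cert" "$chain" > "$out" )
}
```

Usage: `build_gms_pem passwordless.key server.crt intermediate.crt gms_mobility.pem && cp gms_mobility.pem /var/lib/datasync/ && gms restart`.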

Reference TID from Micro Focus

This Micro Focus TID is not completely accurate for GMS 18.3.1+, but the general process is the same, and it goes into more detail about the entire process. Note the file name and location changes that I have outlined.

How to configure Certificates from Trusted CA for Mobility (microfocus.com)

Starting / Stopping / Enabling / Disabling GroupWise Mobility

The only way to start and stop GroupWise Mobility services is from the command line; it is no longer possible to do this from the Mobility Dashboard. When I asked about the dashboard change, the developers provided the following explanation:

"When GMS was converted to run on python 3, there was a significant change in how the connectors (or sync agents) were loaded during startup. We found that in the python 2 days of GMS that often simply starting/stopping the agents from the web admin did not always work in the way that it should (i.e. an agent that was in status "Stopped" would shortly return to the "Stopped" state again after it was started via the web admin control). For this reason, we decided that it was best to just remove this half-broken functionality from the web admin."

| Function | Command |
|---|---|
| Start GroupWise Mobility | gms start |
| Stop GroupWise Mobility | gms stop |
| Show GroupWise Mobility Status | gms status |
| Restart GroupWise Mobility | gms restart |
| Enable GroupWise Mobility (enable GMS to start automatically with system) | gms enable |
| Disable GroupWise Mobility (prevent GMS from starting automatically with system) | gms disable |

Troubleshooting GMS

Everything below this point relates to the general troubleshooting of a GroupWise Mobility system. Many issues you may experience are related to performance issues and resource exhaustion. The tips below are things I do to squeeze more performance out of my systems.

GMS Troubleshooting Tool: MCHECK

GroupWise Mobility has a tool called MCHECK that is used to troubleshoot a variety of different issues related to the day-to-day operation of a GMS system. If you are having any problems with GMS, a good rule of thumb is to run some basic tests with MCHECK and see if any problems are reported.

Documentation: Refer to the link below for product documentation:

https://www.novell.com/documentation/groupwise24/gwmob_guide_admin/data/admin_mgt_mcheck.html

NOTE: The tool DSAPP that was used by older versions of GroupWise Mobility is deprecated and will not work on current versions of GroupWise Mobility. MCHECK is fully supported and actively developed with new features and options in each new version of GMS. Trying to run DSAPP will leave you frustrated and angry, even if the TIDs you're reading refer to it and make it sound like it should work.

MCHECK - General Health Check

MCHECK has quite a few different options and overall is pretty useful. I do not go into extensive detail on all of the options here (refer to the documentation). However, the most useful check is the "General Health Check" because of the number of different checks it runs in a single operation. The General Health Check runs and displays a series of tests on the GMS server. After all the checks are run, you can view more detailed information about each check in the mcheck log file.

Launching MCHECK & Running a General Health Check

MCHECK is a command line tool that presents you with a text-based menu. It is a Python application and uses Python to launch. The easiest way to run it is by changing to the folder where the MCHECK tool is located and running it from there.

MCheck (Version: 24.1) - Running as root

Select option:

- Choose "4. Checks & Queries" from the menu

- This will bring you to the Checks & Queries submenu, where you will see the "General Health Check" option.

MCheck (Version: 24.1) - Running as root

--------------------------------------

Checks & Queries

1. General Health Check

2. GW pending events by User (consumerevents)

3. Mobility pending events by User (syncevents)

4. Generate csv list of inactive users

0. Back

Select option:

- Choose "1. General Health Check". This will start the process and generate the results as shown below:

===Running General Health Check===

--------------------------------------

Checking Mobility Services... Passed

Checking Trusted Application... Passed

Checking Required XMLs... Passed

Checking XMLs... Passed

Checking PSQL Configuration... Passed

Checking Proxy Configuration... Passed

Checking Disk Space... Passed

Checking Memory... Warning

Checking VMware-tools... Passed

Checking Automatic Startup... Passed

Checking Database Schema... Passed

Checking Database Maintenance... Passed

Checking Reference Count... Failed

Checking Databases Integrity... Failed

Checking Targets Table... Passed

Checking Certificates... Warning

The remaining checks may take a while.

Please be patient...

Checking Disk Read Speed... Passed

Checking Server Date... Passed

Checking RPMs... Passed

Checking Nightly Maintenance... Passed

Press Enter to continue

MCHECK Log Files & Viewing Problems Found

The log files for MCHECK provide more detail about the issues that are reported. Since the checks cover a lot of different things, it's difficult to outline every possible scenario here. The best thing to do is look at the logs, view the detailed information about any specific issue, and determine how to proceed from there. If the issue is over your head or beyond your comfort level, don't hesitate to reach out. The MCHECK log files are located here:

/opt/novell/datasync/tools/mcheck/logs

For the General Health Check log files, look for the file(s) "generalHealthCheck_" + Date/Timestamp + ".log". The file will be timestamped with the date and time the check was run. After you run a general health check, just check the most recent log file. Below is an example of the file listing of the log folder.

-rw-r--r-- 1 root root 0 Jul 18 2022 generalHealthCheck_2022-07-18T23:18:20.log

-rw-r--r-- 1 root root 27817 Nov 2 2022 generalHealthCheck_2022-11-02T05:40:05.log

-rw-r--r-- 1 root root 27516 Jan 4 22:13 generalHealthCheck_2024-01-04T22:12:23.log

-rw-r--r-- 1 root root 27694 Jan 14 19:45 generalHealthCheck_2024-01-14T19:44:44.log

-rw-r--r-- 1 root root 27671 Jan 14 19:48 generalHealthCheck_2024-01-14T19:47:59.log

-rw-r--r-- 1 root root 55336 Jan 14 20:21 generalHealthCheck_2024-01-14T20:19:53.log

-rw-r--r-- 1 root root 0 Jan 14 21:05 generalHealthCheck_2024-01-14T21:05:55.log

-rw-r--r-- 1 root root 27668 Jan 14 21:09 generalHealthCheck_2024-01-14T21:08:51.log
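Because the file names embed an ISO-style timestamp, a plain byte-order sort finds the newest log. This minimal sketch (the `latest_health_log` name is hypothetical) also skips zero-byte logs like the two in the listing above, on the assumption that they hold nothing useful.

```shell
#!/bin/bash
# Hypothetical helper: print the newest non-empty General Health Check log.
latest_health_log() {
    local dir="${1:-/opt/novell/datasync/tools/mcheck/logs}" latest
    latest="$(
        for f in "$dir"/generalHealthCheck_*.log; do
            [ -s "$f" ] && printf '%s\n' "$f"    # -s: exists and is not empty
        done | LC_ALL=C sort | tail -n 1
    )"
    [ -n "$latest" ] || return 1                 # no usable log found
    printf '%s\n' "$latest"
}
```

Usage: `less "$(latest_health_log)"`.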

Memory Considerations

Over the years, the most common problems I've seen with GMS are related to performance. So I have spent an incredible amount of time and energy finding ways to tune and optimize the performance of a GroupWise Mobility server. 90% of the issues I run into are due to the server not having enough memory. Everybody wants to be in denial about how much RAM is really required. After all, when GMS was first introduced, it was a very lightweight application that hardly took any resources at all. This is no longer true, and GMS can be very CPU and Memory intensive depending on your system, user count, device count, and data sizes.

| From The GroupWise Documentation | From Real-World Experience |
|---|---|

Symptoms of Low RAM in a GroupWise Mobility Server

When the RAM on a GMS server is depleted, you will typically experience the following symptoms:

- Extreme latency with receiving messages on your phone.

- Phones stop syncing system wide.

- A reboot of the server immediately resolves the issue because it frees up all the RAM and starts over with its usage.

- Your SWAP space will be consumed, which can cause even more problems, especially if your server is virtualized.

Diagnosing Low Memory

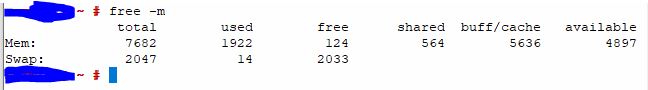

A good way to determine the memory status on your GMS server is by using the "free -m" Linux command, as shown in the screenshot:

Analysis

You can see that this server has 8GB RAM (7682MB). For reference, this is a very small system with only 15 users on GroupWise Mobility. If the customer told me they were having problems syncing phones, I would look at this and most likely increase the RAM to 10GB. Here are a few items I look at:

- Free: This shows 124MB free out of 7682MB. That is very little free memory.

- Swap: Out of a 2GB swap space, 14MB has been used. The fact that swap space has been used at all tells me that it needs more RAM. In a perfect world, I want to avoid using swap space (That is a discussion for another day).

- Uptime: Uptime stats for this server: 19:36:20 up 73 days 9:13, 1 user, load average: 0.36, 0.59, 0.61

Because the server has been up for 73 days, I can be confident that memory usage has normalized and isn't being influenced by a recent reboot. Having a server that's been up for a while gives me more confidence in my memory stats and analysis.
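The two signals used in this analysis (free RAM and any swap usage) can be pulled out of `free -m` automatically. This is a minimal sketch; `assess_memory` is a hypothetical name, and it only flags swap use. Whether the free figure is "too low" is still your judgment, together with the server's uptime.

```shell
#!/bin/bash
# Hypothetical summary of "free -m" output: free RAM and swap usage.
# Reads the Mem: and Swap: lines; columns are total, used, free, ...
assess_memory() {
    awk '
        /^Mem:/  { total = $2; free = $4 }
        /^Swap:/ { swap_total = $2; swap_used = $3 }
        END {
            printf "RAM: %d MB free of %d MB\n", free, total
            printf "Swap: %d MB used of %d MB\n", swap_used, swap_total
            if (swap_used > 0) print "WARNING: swap is in use; consider adding RAM"
        }'
}
```

Usage: `free -m | assess_memory; uptime`.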

CPU Considerations

GroupWise Mobility relies heavily on the PostgreSQL database. Databases in general can be CPU intensive. CPU requirements go up with user count, device count, and data sizes.

| From The GroupWise Documentation | From Real-World Experience |
|---|---|
|  | 4 cores are generally adequate on a smaller system, but may not be practical at all on larger systems with hundreds of users. What you will find is that your Mobility Server can run very high CPU utilization with average day-to-day usage. Adding CPUs can help with this situation and help the system run better. The biggest consumer of the CPU is the PostgreSQL service. |

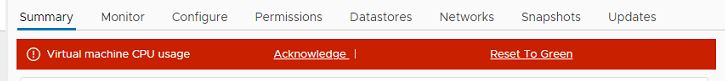

Symptoms of an overburdened CPU in a GroupWise Mobility Server

Ensure that your RAM allocations are adequate before troubleshooting the processor. RAM accounts for most performance problems. After you are confident you have enough RAM, note the following symptoms that could indicate the CPU allocations are too small.

- Very high CPU utilization at the console.

- Latency sending and receiving email on phones, system-wide.

- If virtualized, CPU alerts in VMware.

The Linux "top" Command

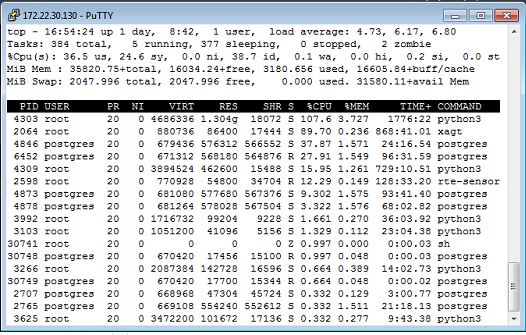

The "top" command shows the most heavily utilized processes on a Linux server in real time. Take a look at the screenshot above. It outlines the heaviest processes that are hitting the CPU.

As a point of reference, this is a very busy server. It currently has 36GB RAM assigned, 6 CPUs, and 400 users provisioned. I am troubleshooting high utilization and complaints from the customer.

CPU / "top" analysis

You can see from the results of this "top" command that the majority of the processes are either "python3" or "postgres". GroupWise Mobility is built using Python 3, and it uses the PostgreSQL database. Therefore, you can safely say that GroupWise Mobility is hitting the CPU hard on this server. Increasing the CPU count from 6 to 8 could help with performance.
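To put a number on "python3 and postgres dominate the CPU", you can total CPU usage by process name. A minimal sketch follows, using `ps -eo pcpu=,comm=` as input; the helper name `cpu_by_command` is hypothetical. Note that `ps` reports %CPU averaged over each process's lifetime, not the instantaneous value that `top` shows, so treat the totals as a rough indicator. The Python process name may also differ slightly (for example `python3.11`) depending on the GMS version.

```shell
#!/bin/sh
# cpu_by_command (hypothetical helper): reads "%cpu command" lines on stdin and
# prints total %CPU, process count, and command name, busiest first.
cpu_by_command() {
  awk 'NF >= 2 {
         c = $2
         for (i = 3; i <= NF; i++) c = c " " $i   # keep command names containing spaces
         cpu[c] += $1; n[c]++
       }
       END { for (c in cpu) printf "%6.1f %3d %s\n", cpu[c], n[c], c }' | sort -rn
}
```

Usage: `ps -eo pcpu=,comm= | cpu_by_command | head`.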

NOTE: There are numerous articles available online about the number of CPUs in a server, and research shows that more CPUs do not always mean better performance. Some even argue that adding CPUs can sometimes reduce performance. So when it comes to increasing the number of CPUs in a GroupWise Mobility server, I approach this cautiously. It is usually something I change only after I have exhausted all the other recommendations in this article.

Misc Performance Tuning

There are five other items that can be tuned if you are not getting the performance you need out of your system. Most of them are core Linux settings that depart from the standard defaults. In many cases, the defaults are adequate. But if you are looking to squeeze a little more performance out of your system, these are options you can consider.

- I/O Scheduler: "none"

- File System Mount Option: "noatime"

- Linux Swappiness

- Storage Hardware / SAN Tiered Storage

- Log Level in GMS

**NOTE** These are advanced system settings, and you should fully understand that a misstep here could cause severe problems with your system. Please approach with caution.

I/O Scheduler: "none"

Earlier versions of SLES used a default I/O scheduler called "noop". This changed somewhere around SLES 15 SP3. For the details, please reference this SUSE documentation: https://documentation.suse.com/sles/15-SP3/html/SLES-all/cha-tuning-io.html

Summary: The default scheduler on SLES 15 SP3 is "bfq". The desired tweak is to change the scheduler to "none".

The link above explains how to make this change, but it's not the easiest document to read. From the docs, describing "none": "With no overhead compared to other I/O elevator options, it is considered the fastest way of passing down I/O requests on multiple queues to such devices."

Confirm the Current Scheduler and proper scheduler name

Before and after any change to the scheduler, you should confirm which scheduler is active. There's nothing worse than making a change and then not knowing whether it worked. Use this simple command to determine which scheduler is active on your system:

cat /sys/block/sda/queue/scheduler

(Replace "sda" with the actual disk device name(s) that GMS is using.)

serverapp1:/ # cat /sys/block/sda/queue/scheduler

none mq-deadline kyber [bfq]

The item in brackets is the active scheduler. In the case of the example above, the active scheduler is "bfq". You want it to be "none".
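To check every disk at once rather than one device at a time, a short loop over /sys/block works. This is a sketch, assuming your GMS disks are named `sd*` as in the example above (adjust the glob otherwise); the function names `active_scheduler` and `report_schedulers` are hypothetical. It prints the bracketed, active scheduler for each disk.

```shell
#!/bin/sh
# active_scheduler (hypothetical helper): prints the bracketed entry from a line
# such as "none mq-deadline kyber [bfq]".
active_scheduler() {
  sed -n 's/.*\[\([^]]*\)\].*/\1/p'
}

# report_schedulers (hypothetical helper): shows the active scheduler for each sd* disk.
report_schedulers() {
  for q in /sys/block/sd*/queue/scheduler; do
    [ -r "$q" ] || continue            # glob matched nothing or file unreadable
    dev=${q#/sys/block/}; dev=${dev%%/*}
    printf '%s: %s\n' "$dev" "$(active_scheduler < "$q")"
  done
}
```

Running `report_schedulers` before and after the change should show each disk moving from `bfq` to `none`.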

Here is what you need to do to implement the "none" scheduler:

- Copy the file /usr/lib/udev/rules.d/60-io-scheduler.rules to /etc/udev/rules.d/.

- Edit /etc/udev/rules.d/60-io-scheduler.rules and find the section that begins with this line:

# --- EDIT BELOW HERE after copying to /etc/udev/rules.d ---

- Add this line to that section, modifying the "sd[a-z]" portion as required to match your specific device names:

KERNEL=="sd[a-z]*", ATTR{queue/scheduler}="none", GOTO="scheduler_end"

- Your file should look something like this:

# --- EDIT BELOW HERE after copying to /etc/udev/rules.d ---

# Uncomment these if you want to force virtual devices to use no scheduler

# KERNEL=="vd[a-z]*", ATTR{queue/scheduler}="none", GOTO="scheduler_end"

# KERNEL=="xvd[a-z]*", ATTR{queue/scheduler}="none", GOTO="scheduler_end"

KERNEL=="sd[a-z]*", ATTR{queue/scheduler}="none", GOTO="scheduler_end"

- Reboot the server after making this change.

**NOTE** The above line may need to be changed to match the naming of your disk devices. The pattern uses shell-style wildcard (glob) matching, so "sd[a-z]*" sets the scheduler to "none" on any device whose name starts with "sd" followed by a letter from a through z: for example sda, sdb, sdc, sdd, sde... sdx, sdy, sdz. On most VMware virtualized Linux servers, the line as written will work. It's possible that yours will be different; if so, modify that line accordingly. You can easily see your device names with this command:


df -h

This will show you a list of your mounted devices, and you should see something like the output below. In this example, you can see sda, sdb, and sdc, meaning there are three separate virtual disks.

server2:/# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sda4 45G 6.1G 37G 15% /

/dev/sda3 2.0G 50M 1.8G 3% /root

/dev/sdb 25G 2.9G 21G 13% /var/log

/dev/sda1 511M 4.9M 507M 1% /boot/efi

/dev/sdc 492G 26G 441G 6% /var/lib
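If you want just the disk names (without partition numbers) from that output, a short filter does it. A minimal sketch, assuming `sd*` device naming; `disk_names_from_df` is a hypothetical name. As an alternative, `lsblk -d -o NAME,SIZE` lists whole disks directly, including ones that are not mounted.

```shell
#!/bin/sh
# disk_names_from_df (hypothetical helper): reads `df -h` output on stdin and
# prints each /dev/sd* disk once, with partition numbers stripped (sda4 -> sda).
disk_names_from_df() {
  awk '$1 ~ /^\/dev\/sd/ {
         d = $1
         sub(/^\/dev\//, "", d)   # /dev/sda4 -> sda4
         sub(/[0-9]+$/, "", d)    # sda4 -> sda
         print d
       }' | sort -u
}
```

Usage: `df -h | disk_names_from_df`.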

File System Mount Option: "noatime"

When a Linux file system is mounted, by default the file system records an access time (atime) attribute on files as they are read. (Modern kernels default to "relatime", which limits how often the access time is updated, but the updates still happen.) Generally speaking, this is unnecessary and creates added overhead and a performance hit.

Summary: Disable this behavior by adding the "noatime" option to the mount options for your file systems.

This is done in the /etc/fstab file.

gmsserver:~ # cat /etc/fstab

UUID=fa9c8105-065f-4dfa-9766-95e72cb397a5 swap swap defaults 0 0

UUID=9315ebe4-d560-4249-842f-71524c041854 / ext4 defaults,noatime 0 1

UUID=44075e18-79d8-47bb-8b0e-0e39e027a0fc /var/log ext4 noatime,data=ordered 0 2

UUID=17d4970f-a4a3-4664-9170-922cffc9989f /var/lib ext4 noatime,data=ordered 0 2

UUID=38faf7aa-0286-4fe8-925e-7517c5bf8d94 /root ext4 noatime,data=ordered 0 2

UUID=D00B-3D8F /boot/efi vfat utf8 0 2

The above shows a typical /etc/fstab file, with the various file systems being mounted. "noatime" goes in the mount options column, right after the file system type (which you can see here is "ext4"). Multiple mount options are separated with commas. You might see an option called "defaults", or other options such as "data=ordered" as shown here. Just add a comma and then "noatime". The next time the system boots, the option will take effect as the file systems are mounted.
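Before and after editing /etc/fstab, you can list which file systems are still configured without "noatime". A minimal, read-only sketch, assuming the data file systems are ext2/3/4, XFS, or Btrfs; `missing_noatime` is a hypothetical name. To apply the option to an already-mounted file system without waiting for a reboot, `mount -o remount,noatime <mountpoint>` should also work.

```shell
#!/bin/sh
# missing_noatime (hypothetical helper): prints the mount point of every ext2/3/4,
# xfs, or btrfs entry in an fstab-style file whose options do not include noatime.
missing_noatime() {
  awk '
    /^[ \t]*#/ || NF < 4 { next }   # skip comments, blank and short lines
    $3 ~ /^(ext[234]|xfs|btrfs)$/ && $4 !~ /(^|,)noatime(,|$)/ { print $2 }
  ' "${1:-/etc/fstab}"
}
```

Running `missing_noatime` with no argument checks /etc/fstab; empty output means every matching entry already has "noatime".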

I will add more to this section later.

Linux Swappiness

Swappiness controls how aggressively the kernel uses swap space when it needs to free up physical memory. The default value on SLES 15 is 60. The goal is to change this to 1, which minimizes the amount of swapping without completely disabling it.

vm.swappiness = 1: the minimum amount of swapping without disabling it entirely

Find your current setting:

# sysctl vm.swappiness

vm.swappiness = 60

Change the vm.swappiness value:

This command puts the setting into effect immediately, but it will not persist after a reboot:

# echo 1 > /proc/sys/vm/swappiness

To permanently make the change on your server, do the following:

- Edit the file /etc/sysctl.conf.

- Add this statement anywhere in the file:

vm.swappiness = 1

- Save the file and reboot the server.

Confirm the vm.swappiness value:

Confirm the setting is correct by issuing the following command:

# sysctl vm.swappiness

vm.swappiness = 1
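Because the runtime value and the value saved in /etc/sysctl.conf can drift apart, it can help to compare the two. A minimal sketch follows; `persisted_swappiness` is a hypothetical helper that prints the last `vm.swappiness` assignment in a sysctl.conf-style file (when a key appears more than once, the later line is applied last). Files under /etc/sysctl.d/ can also set this value, so check there too if the setting doesn't stick.

```shell
#!/bin/sh
# persisted_swappiness (hypothetical helper): prints the last vm.swappiness value
# assigned in a sysctl.conf-style file (default: /etc/sysctl.conf).
persisted_swappiness() {
  awk -F= '
    /^[ \t]*[#;]/ { next }                          # skip comment lines
    { key = $1; gsub(/[ \t]/, "", key) }
    key == "vm.swappiness" { val = $2; gsub(/[ \t]/, "", val) }
    END { if (val != "") print val }
  ' "${1:-/etc/sysctl.conf}"
}
```

For example, compare `cat /proc/sys/vm/swappiness` with `persisted_swappiness`; after the reboot, both should print 1.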

Storage Hardware / SAN Tiered Storage

Please put your GMS system on the fastest storage available to you. GMS is very disk-intensive, and a slow disk could cripple your system.

Good Options:

- SSD (Solid State Drives)

- 10K or 15K SAS drives in a fast RAID configuration

- High-performance SANs

- The fastest / highest priority storage on a multi-tier SAN

Bad Options:

- Slow SATA drives

- SAN connected to a slow iSCSI connection

- The slowest tier on a multi-tier SAN

Diagnostic Logging and Finding Problems

When you're troubleshooting GroupWise Mobility issues, having the system configured for Diagnostic logging is critical. The logs show exactly what is happening, and why. Depending on the issue, you can sometimes identify a problem on your own, but in most cases an experienced Support person or developer needs to review them. There may be a few specific things you can look for that could be helpful. Consider the following in regard to diagnostic logging:

- Diagnostic logging is very heavy. On a busy system, it can significantly impact performance.

- On a busy system, Diagnostic logging can generate millions of lines of logging in a very short time span. It's nearly impossible to read the Diagnostic logs and know what to look for. Therefore, my preference is to turn on diagnostic logging only when I need to gather information for the Support team to analyze. Otherwise, turn it off and save system resources.

- The default log level for GMS is "info". This is the preferred setting for day-to-day usage. However, sometimes when you are diagnosing problem conditions, you need to set the logs to Diagnostic.

Helpful Tips about Logging

- If you're troubleshooting GMS, also ensure that your POAs are in Verbose logging mode so you can troubleshoot that side if needed.

- If restarting GMS clears up the problem temporarily, it's better NOT to restart it just to clear the problem, because that may interfere with a diagnosis. Instead, set the logs to debug, restart GMS, and then let it run for a while. When the problem comes back, you should have a lot of good diagnostic data to work with.

- Unless you are looking for specific historical data or trends, old log files are not that useful in most cases. Sometimes it is helpful to shut down GMS, remove (or move) all the log files in /var/log/datasync (and its subfolders), and get a clean start for simplicity's sake. The amount of logging is overwhelming, so a clean start makes it easier to know you're looking at the right data.

- When troubleshooting specific sync issues, it's important to have some information handy relevant to the situation:

- Date/Time of the issue or message that was sent but not synced or received.

- Subject Line of messages that might not be syncing.

- Email recipient of the problematic message.

- One additional strategy on a very busy system is to put the GMS log files on a different disk partition than the GMS data. This balances disk writes across different devices and theoretically minimizes the hit on the primary GMS data partition. If you followed the system build recommendations outlined earlier, you accomplish this by having 2 or even 3 disks. If you've built a system with a different partitioning strategy, you may have to improvise and adjust based on how it is configured. If the system is already running, completely changing the partitioning strategy is not practical or possible. But you could add another VMDK file (assuming VMware) to the server, partition it, and mount it at the GMS log location, /var/log/datasync. If you already have the main GMS data partitioned separately and /var/log is on the system partition, then this suggestion is less applicable.
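Two of the tips above (the clean start, and having message details handy) lend themselves to small helpers. The sketch below assumes GMS has already been stopped and that the archive destination is outside /var/log/datasync; `archive_gms_logs`, `search_gms_logs`, and the /root default destination are hypothetical. The first saves the existing logs to a timestamped tarball and then deletes only the files, leaving the directory tree in place. The second searches current and rotated `.gz` logs for a fixed string such as a message subject, using `zgrep`, which also reads uncompressed files.

```shell
#!/bin/sh
# archive_gms_logs (hypothetical helper): tar up a log tree, then delete the files.
# Stop GMS first. The default destination (/root) is an assumption; pick your own.
archive_gms_logs() {
  src=${1:-/var/log/datasync}
  dest=${2:-/root}
  [ -d "$src" ] || { echo "no such directory: $src" >&2; return 1; }
  archive="$dest/datasync-logs-$(date +%Y%m%d-%H%M%S).tar.gz"
  # Only delete the originals if the archive was written successfully.
  tar -czf "$archive" -C "$src" . || return 1
  find "$src" -type f -delete
  echo "$archive"
}

# search_gms_logs (hypothetical helper): case-insensitive fixed-string search
# across current and rotated (.gz) logs under a directory.
search_gms_logs() {
  pattern=$1
  dir=${2:-/var/log/datasync}
  find "$dir" -type f -name '*.log*' -exec zgrep -i -F -e "$pattern" {} +
}
```

For example, `search_gms_logs "Quarterly Report"` lists every log line mentioning that subject, prefixed with the file it came from.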

Note About Changing LOG Settings

- Changing the log level requires a GMS restart. However, restarting GMS may temporarily clear the very problem you are trying to diagnose, which makes having to restart GMS to enable diagnostic mode problematic. For that reason, I generally keep diagnostic logging on while a server is having problems, and turn it off once the issue is resolved. Once you have switched to Diagnostic mode and restarted GMS, you may have to wait a while for the problem to come back, depending on the situation.

Idle Threads

You can view the logs in real time to see how many threads are in use and how many are idle. If you have only one or two idle threads, or if all threads are consumed, your system may be experiencing performance issues and slow sync times. Monitor the number of idle threads by following the mobility-agent.log file with this command:

mobility:~ # tail -f /var/log/datasync/connectors/mobility-agent.log | grep -i 'Idle Threads'

This displays a live view of the relevant log file and shows how many threads are idle. Here is output from two different servers for analysis. In the first, only 1 of 21 threads is consistently available. In the second, 12 or 13 of 20 threads are consistently available.

Server Example 1

2024-02-02 14:00:17.751 INFO [CP Server Thread-51] [DeviceInterface:302] [userID:] [eventID:] [objectID:] [] Idle threads = 1 / 21.

2024-02-02 14:00:18.396 INFO [CP Server Thread-40] [DeviceInterface:302] [userID:] [eventID:] [objectID:] [] Idle threads = 1 / 21.

2024-02-02 14:00:18.466 INFO [CP Server Thread-37] [DeviceInterface:302] [userID:] [eventID:] [objectID:] [] Idle threads = 1 / 21.

2024-02-02 14:00:34.627 INFO [CP Server Thread-66] [DeviceInterface:302] [userID:] [eventID:] [objectID:] [] Idle threads = 1 / 21.

Server Example 2

2024-02-02 14:04:02.361 INFO [CP Server Thread-56] [DeviceInterface:302] [userID:] [eventID:] [objectID:] [] Idle threads = 13 / 20.

2024-02-02 14:04:02.669 INFO [CP Server Thread-47] [DeviceInterface:302] [userID:] [eventID:] [objectID:] [] Idle threads = 12 / 20.

2024-02-02 14:04:03.198 INFO [CP Server Thread-43] [DeviceInterface:302] [userID:] [eventID:] [objectID:] [] Idle threads = 12 / 20.
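Rather than eyeballing the scrolling output, you can summarize a batch of these lines. A minimal sketch, assuming the "Idle threads = N / M." wording shown in the examples above; `idle_thread_summary` is a hypothetical name. It reports how many samples it saw, the lowest and average idle count, and the thread pool size.

```shell
#!/bin/sh
# idle_thread_summary (hypothetical helper): reads mobility-agent.log lines on stdin
# and summarizes the "Idle threads = N / M" entries.
idle_thread_summary() {
  awk '
    match($0, /Idle threads = [0-9]+ \/ [0-9]+/) {
      s = substr($0, RSTART, RLENGTH)   # e.g. "Idle threads = 1 / 21"
      split(s, f, " ")                  # f[4] = idle count, f[6] = pool size
      idle = f[4] + 0; total = f[6] + 0
      if (count == 0 || idle < min) min = idle
      sum += idle; count++
    }
    END {
      if (count == 0) { print "no Idle threads lines found"; exit 1 }
      printf "samples=%d min_idle=%d avg_idle=%.1f total=%d\n", count, min, sum / count, total
    }'
}
```

Usage: `tail -n 2000 /var/log/datasync/connectors/mobility-agent.log | idle_thread_summary`. For the two examples above, this prints `samples=4 min_idle=1 avg_idle=1.0 total=21` and `samples=3 min_idle=12 avg_idle=12.3 total=20`.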

There is a TID from OpenText that discusses this briefly and suggests that if you consistently have few idle threads, you may want to increase the number of threads. However, it also recommends not exceeding 20 threads, which seems to be the predefined default. You can view that TID here: https://support.microfocus.com/kb/doc.php?id=7014654

If you are experiencing sync issues, and you see that your threads are exhausted, here is what I would suggest:

- Monitor the threads for a while and see if they clear up or the situation improves on its own. Just because they're low does not mean there is an issue. But it is something to be aware of.

- Restart GMS and immediately monitor the threads again to see what kind of impact that makes. Note the differences with the thread usage before and after the restart. If a situation existed where threads malfunctioned, a restart of GMS would immediately clear that up and allow the system to utilize all threads again, which will help performance.

- Note that thread info is not logged unless you have Diagnostic logging enabled in the GMS dashboard.

Mobility Agent "appears to be hung"

Searching the logs for "appears to be hung" tells you whether any threads are hanging now or have hung in the past. If you have threads that are hanging, that is a good reason to open an SR and get tech support involved so they can identify the cause of the hang. Hanging threads will gradually bring your system to a halt, because more threads are likely to hang until no more threads are available.

zgrep on the archived logs

This command searches all of the .gz files for the mobility agent (the pattern also matches the current, uncompressed log). The .gz files are old copies of the logs that have been compressed, and zgrep lets you search inside them without extracting them first.

mobility: # cd /var/log/datasync/connectors

mobility: # zgrep -i "appears to be hung" mobility-agent.log*

grep on the current log file

This command monitors the current log file and displays any matches live, as they happen.

mobility: # tail -f /var/log/datasync/connectors/mobility-agent.log | grep -i "appears to be hung"
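To see at a glance which log files (current and archived) contain hang messages, and how many, a per-file count can help. A minimal sketch, assuming the log naming used above; `count_hung_threads` is a hypothetical name. It uses `gzip -cdf`, which decompresses `.gz` files and passes plain files through unchanged, so one loop covers both.

```shell
#!/bin/sh
# count_hung_threads (hypothetical helper): prints "<file> <count>" for the current
# mobility agent log and each rotated copy, counting "appears to be hung" lines.
count_hung_threads() {
  dir=${1:-/var/log/datasync/connectors}
  for f in "$dir"/mobility-agent.log*; do
    [ -e "$f" ] || continue                 # glob matched nothing
    # gzip -cdf decompresses .gz files and passes plain files through unchanged.
    n=$(gzip -cdf -- "$f" | grep -ci 'appears to be hung' || true)
    printf '%s %s\n' "$(basename -- "$f")" "$n"
  done
}
```

Any file with a non-zero count is worth mentioning when you open the SR.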

Persistent Device Sync Issues & Rebuilding GMS server

*THIS IS A LAST RESORT SUGGESTION*

Sometimes you run into issues where user devices just don't sync. Or things seem stuck. Or you beat your head against the wall for 3 days trying to diagnose and troubleshoot the situation and cannot make any headway. Troubleshooting system-wide sync issues is a nightmare. There are potentially millions of lines of debug data in your log files, and finding the cause is nearly impossible. Yes, you can get the debug logs to Tech Support, but you're going to have at least a couple of days of turnaround time, or more. Do you want to wait that long, or do you want to just rebuild the server and move on with your life? Back and forth with tech support can turn a situation into weeks of frustration.

The reality is, if you've covered all the other bases in this document, and you're still having issues, sometimes it's actually easier to just bite the bullet, deploy a new GMS server from scratch, and reprovision all of the users. This section is intended to help you rebuild your system with minimal time investment.

20,000 ft overview

The concept behind this process is not a typical migration to a new server; I take a slower approach with those. This is a shotgun wedding: get it done, cut out the BS. It's possible to rebuild a GMS server from scratch in 2 hours. Then you just have to wait for users to provision and you're back in business. But you need to do a little bit of prep work before you abandon the old server. Otherwise you will have to scramble to find things during and after the install. I've outlined all the items you need to concern yourself with in order to turn this process around quickly.

This assumes the following:

- You'll be keeping the same IP address and all other details of the existing server.

- You don't want users to have to change anything on their end.

- You're not going to need to make any firewall or infrastructure changes.

Preparation

Key to this strategy is having all the necessary files and other information ready for your rebuild. Here is a list of things to grab before you start. Make sure you've copied the files to your local system from the existing GMS server.

- The name of your Trusted App from the GroupWise administration console.

- GroupWise Admin Console --> System --> Trusted Applications --> Find the name of the one you use for Mobility and write it down.

- Your Trusted App File. From previous installations, you should have exported your trusted app key to a text file and then used that when you installed GMS before. You had to have this when you installed GMS in the first place. On my server for example, I use /opt/iso/mobility.txt. This could be any filename located anywhere on your existing GMS server, but you put it there so go find it.

- Your SSL certificate file. This is located at /var/lib/datasync/gms_mobility.pem.

- The latest GroupWise Mobility installation media. For example, groupwise-mobility-service-18.5.0-x86_64-1032.iso

- Your network details, including IP address, subnet mask, gateway, DNS names, server name. Everything you need to rebuild the server and assign the correct info to it so it is functional on the network.

- Your VM configuration details: CPU Count, Memory, Disk Size Etc.

- Have all your users provisioned via GROUPS. This makes redeployment much faster. If you don't have them by groups currently, consider creating a Group in GroupWise, adding those same users to that group, and then using that Group on the new server to provision the users quickly.

- Take screenshots of all your configuration screens in the GroupWise Mobility Service dashboard. Every single page. There are 5 total:

- General | GroupWise | Device | User Source | Single Sign-On

- If you have multiple administrators that login to the GMS dashboard for administration purposes, make sure to grab the details of the configuration file so you can easily replicate it on the new system. The file is here:

- /etc/datasync/configengine/configengine.xml

- The section you need is the top part in the header in the "GW" section. Each user should be defined in a separate "Username" tag.
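Gathering the files can be scripted so nothing is forgotten. A minimal sketch, assuming GNU `cp` (for `--parents`, which recreates each file's directory path under the destination); `gms_prep_bundle` is a hypothetical name, and /opt/iso/mobility.txt is only the example trusted app file location from this guide, so substitute your own. The function copies what it finds, warns about anything missing, and returns the number of missing files.

```shell
#!/bin/sh
# gms_prep_bundle (hypothetical helper): copy the listed files into DEST, keeping
# their original directory layout, and report any that are missing.
gms_prep_bundle() {
  dest=$1; shift
  mkdir -p -- "$dest" || return 1
  missing=0
  for f in "$@"; do
    if [ -f "$f" ]; then
      # --parents (GNU cp) recreates the source path under $dest
      cp --parents -p -- "$f" "$dest"/ || return 1
    else
      echo "MISSING: $f" >&2
      missing=$((missing + 1))
    fi
  done
  return "$missing"
}

# Example (adjust the trusted app file path to yours), then copy the tarball off the server:
# gms_prep_bundle /root/gms-prep /opt/iso/mobility.txt \
#   /var/lib/datasync/gms_mobility.pem /etc/datasync/configengine/configengine.xml
# tar -czf /root/gms-prep.tar.gz -C /root gms-prep
```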

Execution

This is a very high-level, bullet-pointed overview of how to get the new server built and into full production quickly.

- Shut down the old server.

- Build a new SLES 15 SP5 server from scratch. Configure it with the actual IP address, gateway, DNS, etc. Register it and apply all updates so it is 100% fully patched. At this point you have a base server ready to go.

- Use WinSCP to copy the files you grabbed from the old server to the new server. You'll need the following:

- mobility.txt (or whatever your GroupWise Trusted App keyfile is).

- gms_mobility.pem (the SSL certificate, most likely your 3rd party SSL cert for your company).

- The GMS install ISO, for example groupwise-mobility-service-18.5.0-x86_64-1032.iso.

- Configure the firewall to allow the following ports to the GMS server: 443/tcp, 4500/tcp, 4500/udp, 8120/tcp.

- # firewall-cmd --permanent --add-port=443/tcp

- # firewall-cmd --permanent --add-port=8120/tcp

- # firewall-cmd --permanent --add-port=4500/tcp

- # firewall-cmd --permanent --add-port=4500/udp

- # firewall-cmd --reload

- Mount the GMS ISO file and run the installer to complete the GMS installation, using the following commands:

- # mount -o loop groupwise-mobility-service-18.5.0-x86_64-1032.iso /mnt

- # /mnt/.install.sh

- Step through it. Note the following:

- When prompted whether you want to use a self-signed certificate, say Yes. We'll replace it later, but this will get you through the installer for now.

- At one point, the installer asks you for the file containing the Trusted App key, so you'll need to know where it is in order to load it at this step.

- Once the installer completes successfully, log in to the GMS dashboard at https://x.x.x.x:8120.

- DO NOT ADD ANY USERS OR GROUPS YET. That will just slow you down. You do that last. We have some other work to do first.

- At the Linux console, copy the SSL certificate from the old server. It should go here:

- /var/lib/datasync/gms_mobility.pem

- Don't mess with any of the other cert files in that folder.

- Go to the dashboard and configure all the settings to match what your old server used. You should have taken screenshots of the 5 config pages for reference.

- If you have multiple administrators, edit the config file that manages that aspect and paste in the directives from your old server.

- /etc/datasync/configengine/configengine.xml

- Once all the settings match, reboot the server for good measure.

- Make sure the GMS services start correctly at the Linux console.

- Make sure the services are running in the Dashboard.

- Resolve any errors or conditions. But it should be working at this point.

- Browse to the Dashboard and make sure the correct certificate is loading. It should be using your 3rd party cert, not a self-signed cert.

- Reapply any performance tweaks that you had before. Specifically, the Linux I/O scheduler, "noatime" on the mounted partitions, and the Linux swappiness. Refer to the "Misc Performance Tuning" section of this document.

- Go to the Users tab and add the Groups that contain the GMS users you need to provision. If you're using individual users instead of groups, add them here as well, but it's more tedious and time-consuming.

- Once you see in the dashboard that your user accounts are being added, you're done. Just let it run, and eventually the account provisioning will be complete. Once it's done, users should be able to connect again just like before. Some users may have issues on their devices, but that generally affects only 1-2% of devices. So it's manageable, and they probably just need to remove and re-add the account on their phone or device.
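Two of the steps above, opening the firewall ports and confirming the certificate, are easy to script or double-check from the shell. This sketch assumes firewalld (as in the commands above) and the certificate path used in this guide; `open_gms_ports`, `is_self_signed`, the `FIREWALL_CMD` override (handy for a dry run with `FIREWALL_CMD=echo`), and the host name gms.example.com are hypothetical. `is_self_signed` compares the certificate's subject and issuer, which is a quick heuristic rather than a full signature check.

```shell
#!/bin/sh
# Ports from the firewall step above.
GMS_PORTS="443/tcp 8120/tcp 4500/tcp 4500/udp"

# open_gms_ports (hypothetical helper): opens the GMS ports permanently, then reloads.
# Set FIREWALL_CMD=echo to print the commands instead of running them.
open_gms_ports() {
  fw=${FIREWALL_CMD:-firewall-cmd}
  for p in $GMS_PORTS; do
    $fw --permanent --add-port="$p" || return 1
  done
  $fw --reload
}

# is_self_signed (hypothetical helper): succeeds if the first certificate in a PEM
# file has identical subject and issuer (a heuristic for a self-signed cert).
is_self_signed() {
  subj=$(openssl x509 -in "$1" -noout -subject) || return 2
  iss=$(openssl x509 -in "$1" -noout -issuer) || return 2
  [ "${subj#subject=}" = "${iss#issuer=}" ]
}

# Example usage on the new server:
# open_gms_ports && firewall-cmd --list-ports
# openssl x509 -in /var/lib/datasync/gms_mobility.pem -noout -subject -issuer -enddate
# is_self_signed /var/lib/datasync/gms_mobility.pem && echo "still self-signed"
# openssl s_client -connect gms.example.com:8120 </dev/null 2>/dev/null | openssl x509 -noout -subject -issuer
```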

YES but our system is HUGE and it will take FOREVER

I know. On larger systems, reprovisioning all the users will take some time. Do it after hours or over the weekend if you can. I've personally worked on GMS servers with 500 or more users and even more devices. The rebuild process is the same regardless of the number of users; the only thing that takes longer is having more users and more data. So factor that into your rebuild plan and let the process run overnight. It will be okay.

It's also possible that once users start hitting the system again, it will build up a little bit of a backlog while devices are syncing and pulling in email. This process can vary and can take longer depending on how much mail you are allowing to sync to the devices, how many devices, and how much mail is in the system in general. It's just one of the things you have to deal with and allow to happen.